Feedforward Deep Neural Networks

This page includes my chapter notes for the book *Neural Networks and Deep Learning* by Michael Nielsen.

chapter 4: a visual proof that neural nets can compute any function

chapter 5: why are deep neural networks hard to train?

chapter 6: deep learning

notes

- insight is forever

- his code is written in Python 2.7

- emotional commitment is a key to achieving mastery

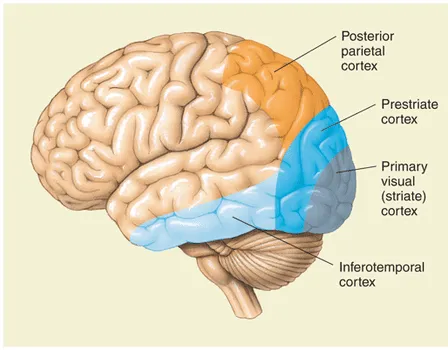

- each hemisphere of the brain has a primary visual cortex (V1) containing 140 million neurons

- two types of artificial neuron: perceptron, sigmoid neuron

- a perceptron takes several binary inputs and produces a single binary output.

- a perceptron can be thought of as a device that makes decisions by weighing up evidence (its inputs)

- perceptrons can compute NAND, and since NAND gates are universal, any computation can be built from networks of them!
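A minimal sketch of the perceptron rule and the NAND argument, written in Python 3 rather than Nielsen's Python 2.7. It assumes the usual rule (output 1 if w·x + b > 0, else 0). The function names `perceptron`, `nand`, `xor` and `half_adder` are hypothetical, chosen only for illustration. The NAND weights (-2, -2) and bias 3 follow the book's example; XOR and the half adder are then wired from NAND gates alone, to show how larger computations follow from NAND.

```python
def perceptron(inputs, weights, bias):
    """Return 1 if w.x + b > 0, else 0 (the perceptron step rule)."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if z > 0 else 0


def nand(x1, x2):
    # Weights -2, -2 and bias 3: only the input (1, 1) gives z <= 0.
    return perceptron([x1, x2], [-2, -2], 3)


def xor(x1, x2):
    # XOR built from four NAND gates only.
    n = nand(x1, x2)
    return nand(nand(x1, n), nand(x2, n))


def half_adder(x1, x2):
    # Returns (sum bit, carry bit); carry = AND = NOT(NAND) = NAND(n, n).
    n = nand(x1, x2)
    return xor(x1, x2), nand(n, n)


if __name__ == "__main__":
    # Print the truth table: a, b, NAND(a, b), (sum, carry).
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, nand(a, b), half_adder(a, b))
```

Everything here is a perceptron with fixed, hand-picked weights; nothing is learned yet, which is exactly the limitation the sigmoid neuron is meant to address.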

sigmoid neurons

- learning means tweaking the weights and biases, so we want a small change in either to produce only a small change in the output

- a perceptron's step function breaks this: a tiny change in a weight or bias can flip the output from 0 to 1, so we replace the step function with the sigmoid function

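writing the neuron's activation function as \(\varphi\), the sigmoid neuron takes \(\varphi = \sigma\), so for inputs \(x_j\), weights \(w_j\) and bias \(b\) its output is: \[\begin{align*} \sigma(z) &\equiv \cfrac{1}{1+e^{-z}}, \qquad z = \sum_j w_j x_j + b,\\ \text{output} = \sigma(z) &= \cfrac{1}{1+\exp\left(-\sum_j w_j x_j - b\right)} \end{align*}\]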
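A minimal sketch contrasting the step function with the sigmoid, in Python 3 with only the standard library. The helpers `sigmoid`, `sigmoid_prime`, `step` and `neuron_output` are hypothetical names, and the weights and bias are made up so that the weighted input sits exactly on the step threshold (z = 0). Nudging one weight by 0.001 flips the step output from 0 to 1, while the sigmoid output moves by only about 0.00025. That matches the first-order estimate Δoutput ≈ σ'(z)Δz, using the standard identity σ'(z) = σ(z)(1 - σ(z)), which equals 0.25 at z = 0.

```python
import math


def sigmoid(z):
    """Logistic function sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def sigmoid_prime(z):
    """Derivative of the logistic function: sigma(z) * (1 - sigma(z))."""
    s = sigmoid(z)
    return s * (1.0 - s)


def step(z):
    """Perceptron-style step: 1 if z > 0 else 0."""
    return 1 if z > 0 else 0


def neuron_output(inputs, weights, bias, activation):
    # Weighted input z = sum_j w_j x_j + b, passed through the activation.
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


x = [1.0, 1.0]
w = [0.5, 0.5]
b = -1.0  # chosen so that z = 0, right on the step's threshold

if __name__ == "__main__":
    for dw in (0.0, 0.001):
        w_nudged = [w[0] + dw, w[1]]  # nudge only the first weight
        print(dw,
              neuron_output(x, w_nudged, b, step),
              neuron_output(x, w_nudged, b, sigmoid))
    # First-order prediction of the sigmoid's change for dz = 0.001:
    print(sigmoid_prime(0.0) * 0.001)
```

For large positive or negative z the sigmoid approaches 1 or 0, so the sigmoid neuron behaves like a perceptron there; the difference shows up only near the threshold, where the smooth output is what makes gradual learning possible.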