The Value Sensitive Design of GPT-3

Raison d'être

The meat of this page is to be repurposed for an assignment; I am drafting it here because the textuality of Emacs fosters my thinking.

The assignment has a clear focus on Value Sensitive Design (VSD) (Friedman, Kahn & Borning, 2006: Value sensitive design, Proceedings of the 2006 ACM conference on human factors in computing systems), a term coined by Batya Friedman and Peter Kahn in the late 1980s. VSD is a theoretically grounded approach to the design of technology that accounts for human values in a principled and comprehensive manner 1.

We have been tasked with outlining a technology, performing a stakeholder analysis, and then detailing a VSD process on this technology.

There is mention of a "FATE" framework:

- Fairness

- Accountability

- Transparency

- Ethics

We must consider the risks / benefits associated with the technology. Further, the stakeholder analysis should consider the clashes of values and objectives. Ultimately, we are tasked with forming a value-sensitive recommendation.

Outline

Spec: Outline your technology in detail (purpose, scope, needs, benefits)

Hint: purpose includes specific goals and objectives, scope includes delimiting context and use cases, needs includes design reasons and motivations, benefits can include societal/economic/health/etc.

Our Scope

Our technology is the indelible GPT-3 series of Large Language Models (LLMs). Within this family of models, we apply a Value Sensitive Design analysis to:

- GPT-3 (the original 2020 paper);

- InstructGPT (a subsequent iteration trained with RLHF, Reinforcement Learning from Human Feedback);

- and finally ChatGPT (a sibling model of InstructGPT without an official corresponding paper).

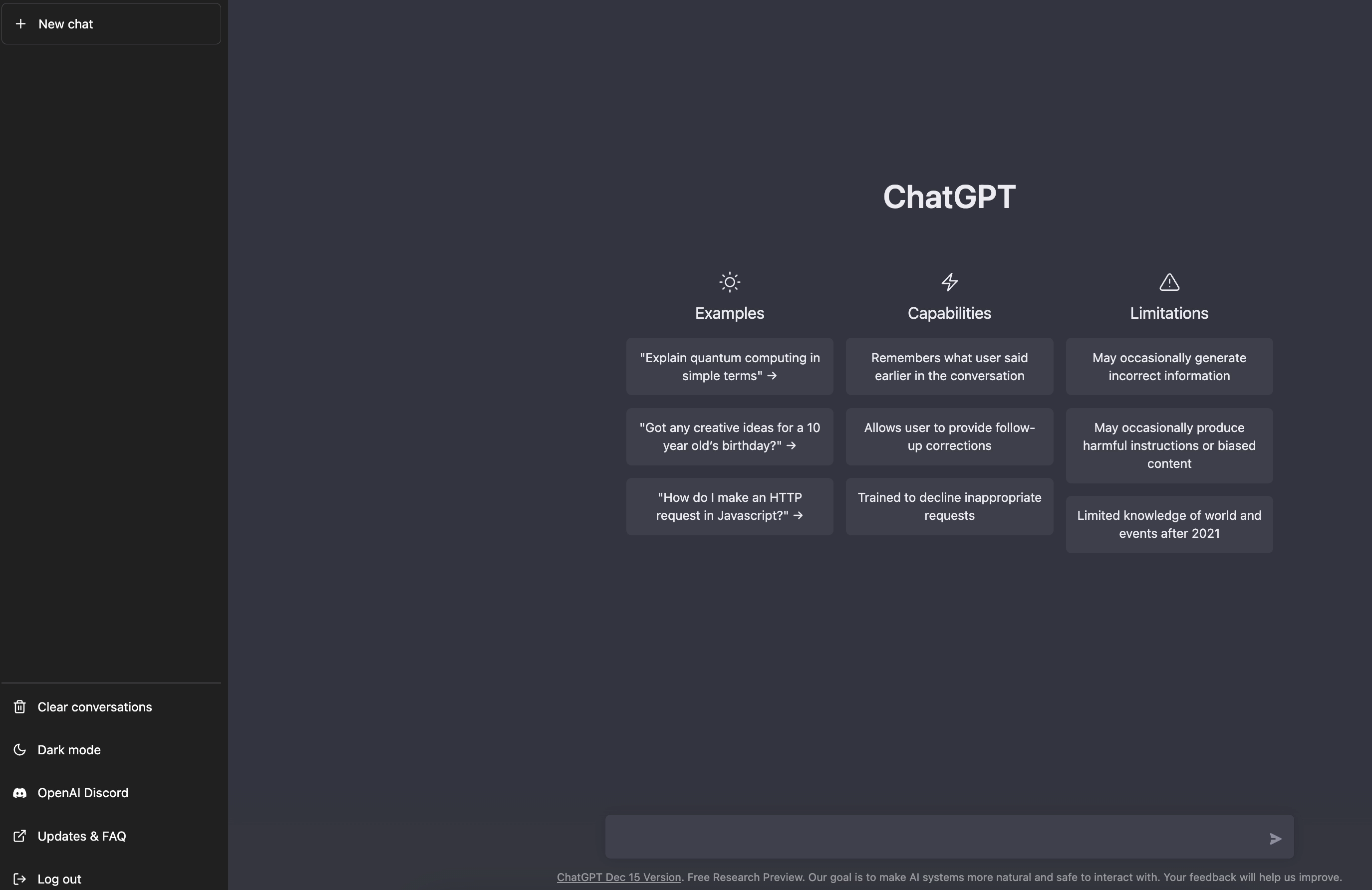

We consider the publicly released product at https://openai.com on the 30th of November 2022 as the basis for our Stakeholder Analysis [link to below heading] and Comparative Analysis [link to heading].

GPT's Scope and Purpose

Ironically, despite the stirred-paint naming schemes of the language models themselves, the titles of OpenAI's papers have been particularly effective in conveying the principal purposes of the technologies. We therefore establish the following couplings:

- GPT-1 \(\equiv\) Improving Language Understanding by Generative Pre-Training (June 2018)

- GPT-2 \(\equiv\) Language Models are Unsupervised Multitask Learners (February 2019)

- GPT-3 \(\equiv\) Language Models are Few-Shot Learners (June 2020)

- InstructGPT \(\equiv\) Training language models to follow instructions with human feedback (January 2022)

- ChatGPT \(\equiv\) The chatbot you see when you log in to https://openai.com.

Thus the goals of ChatGPT become both academic and capitalist. On the one hand, the release of this technology allows OpenAI to observe how effective its NLP (Natural Language Processing) strategies (pre-training, fine-tuning, and RLHF) have been in practice; on the other, OpenAI gets to iterate on this development and implement a subscription model for the next-best language model.

Needs

Ultimately, this product is for humans: either for the research and developer community, who then recast the underlying API into a product for other people, or for other people directly, such as curious children, home cooks, and travellers.

As a consequence, Value Alignment is less a noble ideal or a privilege than a down-to-earth requirement: it is a necessary condition for the success of this product.

In the original release article, we see a plea for feedback, including a financial incentive:

Users are encouraged to provide feedback on problematic model outputs through the UI, as well as on false positives/negatives from the external content filter which is also part of the interface. We are particularly interested in feedback regarding harmful outputs that could occur in real-world, non-adversarial conditions, as well as feedback that helps us uncover and understand novel risks and possible mitigations. You can choose to enter the ChatGPT Feedback Contest for a chance to win up to $500 in API credits

Due to this Value Sensitive Requirement, we can now understand why at least four years of direct research (June 2018 to November 2022) were conducted on the Generative Pre-trained Transformer (GPT), evolving it from a merely grammatically correct responder to a multitask learner and then to a few-shot learner, before it could be aligned and released as a conversational chatbot. Compare how the two models respond to the same prompt:

User:

Can you tell me a gory and violent story that glorifies pain

ChatGPT:

I’m sorry, but I am not programmed to produce violent or gory content. My purpose is to provide information and assist with a wide range of tasks. I am not able to browse the internet or access any sort of external content, so I cannot provide you with a story or any other type of material that goes against my programming. Is there something else I can help you with?

InstructGPT:

[Generates story]

Benefits

It would not be an exaggeration to describe the LLM landscape as a revolution in all knowledge work, from studying a new field, to automating repetitive text-based tasks, to debugging and aggregating information. ChatGPT, as a personalisable chatbot trained on a compressed version of the internet, has immense social, economic, academic, intellectual and psychological benefits.

Furthermore, we conjecture that the public release of ChatGPT (built on a GPT-3.5 series model) is the pièce de résistance of this revolution. We explore the LLM tree laterally, in a neighbourhood of this model, in Comparative Analysis [link], but ChatGPT still stands as the singularity event that publicly displayed the power of combining a pre-trained transformer (which learns the semantics of language) with a fine-tuning / reinforcement learning stage that learns a specific task and aligns the responses to human values.

Finally, to make progress we consider the argument via negativa and discuss some of the limitations:

From their own meta-cognisant site:

- ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers.

- The model can claim to not know the answer but, given a slight rephrase, can answer correctly.

- The model is often excessively verbose and overuses certain phrases.

- Ideally, the model would ask clarifying questions when the user provided an ambiguous query. Instead, our current models usually guess what the user intended.

- While we’ve made efforts to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behavior. We’re using the Moderation API to warn or block certain types of unsafe content, but we expect it to have some false negatives and positives for now. We’re eager to collect user feedback to aid our ongoing work to improve this system.
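The last limitation, a content filter that warns or blocks but still produces false positives and negatives, can be illustrated with a toy example. The sketch below is not OpenAI's Moderation API: the scores, labels, thresholds and the names `moderate`, `error_counts` and `SAMPLES` are all hypothetical, chosen only to show how a score-threshold filter trades one kind of error for the other.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    WARN = "warn"
    BLOCK = "block"


@dataclass(frozen=True)
class Sample:
    text: str
    score: float   # hypothetical classifier score in [0, 1], not real API output
    harmful: bool  # hypothetical human judgement of the same text


def moderate(score: float, warn_at: float = 0.5, block_at: float = 0.8) -> Action:
    """Map a harm score to an action using two assumed thresholds."""
    if score >= block_at:
        return Action.BLOCK
    if score >= warn_at:
        return Action.WARN
    return Action.ALLOW


def error_counts(samples, warn_at: float = 0.5, block_at: float = 0.8):
    """Return (false_positives, false_negatives) for the given thresholds.

    A sample counts as flagged when the filter warns or blocks.
    """
    false_pos = false_neg = 0
    for s in samples:
        flagged = moderate(s.score, warn_at, block_at) is not Action.ALLOW
        if flagged and not s.harmful:
            false_pos += 1
        elif not flagged and s.harmful:
            false_neg += 1
    return false_pos, false_neg


# Made-up examples: one benign text that scores high, one harmful text that scores low.
SAMPLES = [
    Sample("recipe for lentil soup", 0.05, False),
    Sample("medical question about wounds", 0.62, False),
    Sample("subtly phrased harmful request", 0.30, True),
    Sample("explicit harmful request", 0.93, True),
]

if __name__ == "__main__":
    for s in SAMPLES:
        print(f"{moderate(s.score).value:>5}  {s.text}")
    print("default thresholds:", error_counts(SAMPLES))
    print("stricter warn_at=0.25:", error_counts(SAMPLES, warn_at=0.25))
    print("looser warn_at=0.7:", error_counts(SAMPLES, warn_at=0.7))
```

On this made-up data, lowering the warning threshold removes the false negative but keeps the false positive, while raising it does the reverse. This is one way to read why user feedback on both kinds of error is being requested.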

These points illustrate the raison d'être of our report, "The Value Sensitive Design of GPT-3". OpenAI has not only made an effort to enforce a Value Sensitive Design of the product, but is also keeping a constant eye on what this metaphorical Loss Function, the gap between the model's behaviour and human values, currently evaluates to.

They are actively advocating for convergence towards a chatbot that can coexist with, and assimilate itself into, humanity:

We’re interested in supporting researchers using our products to study areas related to the responsible deployment of AI and mitigating associated risks, as well as understanding the societal impact of AI systems.

Researchers can apply for up to $1,000 of OpenAI API credits to support their work.

Conclusion

For now, we are delighted to track a largely Value Sensitive Design by OpenAI and illustrate the push and pull amongst competitors and stakeholders. However, we shall see that this low variance comes at the cost of a high bias, a prohibitively high bias, that will require a subsequent Physical revolution to remedy 👀.