Deep Learning


We have seen what can be learned by the perceptron algorithm — namely, linear decision boundaries for binary classification problems.

It may also be of interest that the perceptron algorithm can be used for regression with one simple modification: skip the activation function (i.e. the sigmoid) and use the raw linear output \(w \cdot x + b\) as the prediction. I invite the interested reader to open another tab.
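For the reader who would rather not open another tab, here is a minimal sketch of that regression variant. Some assumptions on my part: the function name `fit_linear_unit`, the learning rate, the epoch count, and the toy data are all made up for illustration. Note also that once the activation is dropped, the per-sample update is usually called the delta (or least-mean-squares) rule rather than the perceptron rule proper.

```python
import numpy as np

def fit_linear_unit(X, y, lr=0.05, epochs=500):
    """Train a single linear unit (no activation) with per-sample updates.

    Hypothetical helper for illustration: this is the delta / LMS rule,
    i.e. the perceptron update with the activation function removed.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for x_i, y_i in zip(X, y):
            error = y_i - (w @ x_i + b)  # raw linear output, no activation
            w += lr * error * x_i        # move weights to shrink the error
            b += lr * error
    return w, b

# Assumed toy data: noise-free samples of y = 2x + 1
X = np.linspace(-1.0, 1.0, 20).reshape(-1, 1)
y = 2.0 * X[:, 0] + 1.0

w, b = fit_linear_unit(X, y)
print(w, b)  # should land close to slope 2 and intercept 1
```

On noise-free linear data like this, the learned weight and bias should settle near the true slope and intercept; with noisy data they would only approximate the least-squares fit.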

We begin with the punchline:

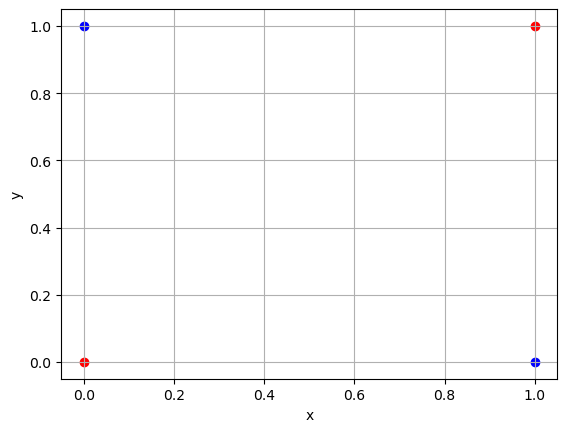

XOR

Not linearly separable in \(\mathbb{R}^2\)
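To make the punchline concrete, here is a small sketch (not the code behind the figure) that trains a classic step-activation perceptron on AND, which is linearly separable, and on XOR, which is not. The helper names `train_perceptron` and `accuracy`, the epoch cap, and the learning rate are my own assumptions.

```python
import numpy as np

def train_perceptron(X, y, epochs=100, lr=1.0):
    """Classic perceptron with a step activation (hypothetical helper)."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x_i, y_i in zip(X, y):
            pred = 1 if w @ x_i + b > 0 else 0
            if pred != y_i:
                # Update only on misclassified points
                w += lr * (y_i - pred) * x_i
                b += lr * (y_i - pred)
                mistakes += 1
        if mistakes == 0:  # a full clean pass means a separating line was found
            break
    return w, b

def accuracy(X, y, w, b):
    preds = (X @ w + b > 0).astype(int)
    return float((preds == y).mean())

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])
y_xor = np.array([0, 1, 1, 0])

and_acc = accuracy(X, y_and, *train_perceptron(X, y_and))
xor_acc = accuracy(X, y_xor, *train_perceptron(X, y_xor))
print("AND accuracy:", and_acc)
print("XOR accuracy:", xor_acc)
```

The AND run stops early with every point classified correctly. The XOR run exhausts its epochs, and no matter how long it trains, a single line in \(\mathbb{R}^2\) can get at most three of the four points right.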

Backlinks (3)

1. Literate Programming and Donald Knuth /blog/literate-programming/

I was first introduced to this concept by DistroTube's (Derek Taylor’s) “literate config” files. At the time I was not using Emacs, and thus all the code I was writing was sparsely commented.

Since then, I have entered the world of Machine Learning and Deep Learning, where suddenly, in 4 lines, I can sit atop my high horse and perform sentiment analysis with Hugging Face's transformers library!

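```python
from transformers import pipeline

# Build a sentiment-analysis pipeline; with no model named, a default pretrained one is downloaded
classifier = pipeline('sentiment-analysis')
# The pipeline returns a list with one result dict per input, so take the first
prediction = classifier("Donald Knuth was the greatest computer scientist.")[0]
print(prediction)
```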

In such an age of abstraction complexity, it becomes paramount to distill what is happening at the last few \((n-k)\) layers.

2. Wiki /wiki/

Knowledge is a paradox. The more one understands, the more one realises the vastness of one's ignorance.