chapter 1: using neural networks to recognise handwritten digits

notes

- insight is forever

- the author's code is written in python 2.7

- emotional commitment is a key to achieving mastery

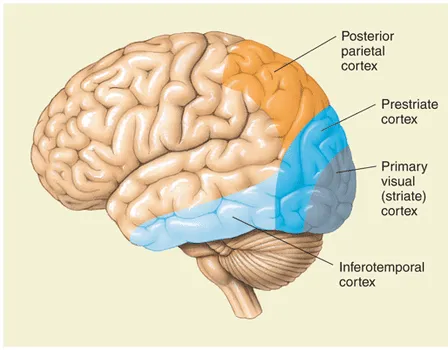

- each hemisphere's primary visual cortex (V1) has 140 million neurons

- the chapter covers two types of artificial neuron: the perceptron and the sigmoid neuron

- a perceptron takes several binary inputs and produces a single binary output.

- a perceptron can be thought of as a device that makes decisions by weighing up evidence (its inputs)

- perceptrons can implement a NAND gate, and since NAND is universal for computation, any logical function can be built from networks of perceptrons!
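a minimal sketch of a perceptron weighing up its evidence, assuming numpy; `perceptron` is a hypothetical helper, and weights of \(-2, -2\) with a bias of \(3\) are one choice that makes it behave as a NAND gate:

```python
import numpy as np


def perceptron(w, x, b):
    """Return 1 if the weighted evidence w . x + b is positive, else 0."""
    return 1 if np.dot(w, x) + b > 0 else 0


# weights of -2 on each input plus a bias of 3 make the perceptron a NAND gate
w = np.array([-2.0, -2.0])
b = 3.0
for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, "->", perceptron(w, np.array([x1, x2]), b))
```

only the input \((1, 1)\) pushes \(w\cdot x + b\) below zero (\(-2 - 2 + 3 = -1\)), so it is the only input that outputs 0.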

sigmoid neurons

- you want to tweak the weights and biases such that small changes in either will produce a small change in the output

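- a perceptron's step function is discontinuous, so a small change in a weight or bias can flip its output from 0 to 1; we therefore replace the step function with the smooth sigmoid function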

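thus a sigmoid neuron with inputs \(x_j\), weights \(w_j\) and bias \(b\) outputs \(\sigma(w\cdot x + b)\), where \(\sigma\) is the sigmoid function: \[\begin{align*} \sigma(z) &\equiv \cfrac{1}{1+e^{-z}}\\ \sigma(w\cdot x + b) &= \cfrac{1}{1+\exp\left(-\sum_j w_jx_j - b\right)} \end{align*}\]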
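a small sketch, using only python's `math` module, of why the step function is a problem: a neuron sitting right at the threshold flips from 0 to 1 under a tiny change in \(z = w\cdot x + b\), while the sigmoid output barely moves. `step` is a hypothetical name for the perceptron's activation:

```python
import math


def step(z):
    """Perceptron activation: 1 if z > 0, else 0."""
    return 1.0 if z > 0 else 0.0


def sigmoid(z):
    """Smooth activation: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


# a neuron just below the threshold, then nudged slightly above it
for z in (-0.01, 0.01):
    print(f"z={z:+.2f}  step={step(z):.1f}  sigmoid={sigmoid(z):.4f}")
```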

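since the output is \(\sigma(w\cdot x + b)\), small changes \(\Delta w_j\) and \(\Delta b\) produce an approximately linear change in the output: \[\Delta \text{output} \approx \sum_j \frac{\partial \text{output}}{\partial w_j}\Delta w_j + \frac{\partial \text{output}}{\partial b}\Delta b \] where \(\Delta\) denotes a small change, not the gradient operator \(\nabla\) (nabla)!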
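a quick numerical check of this linear approximation, assuming numpy and arbitrary illustrative values for the inputs, weights and small changes. it uses \(\partial\,\text{output}/\partial w_j = \sigma'(z)\,x_j\) and \(\partial\,\text{output}/\partial b = \sigma'(z)\), which follow from the chain rule with \(z = w\cdot x + b\):

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_prime(z):
    # derivative of the sigmoid: sigma(z) * (1 - sigma(z))
    return sigmoid(z) * (1.0 - sigmoid(z))


# illustrative values (assumptions, not taken from the text)
x = np.array([0.5, -1.0, 2.0])
w = np.array([0.3, 0.8, -0.2])
b = 0.1
dw = np.array([1e-3, -2e-3, 5e-4])
db = 1e-3

z = np.dot(w, x) + b
# the true change in output when w and b are nudged
exact = sigmoid(np.dot(w + dw, x) + b + db) - sigmoid(z)
# first-order estimate: sum_j (sigma'(z) x_j) dw_j + sigma'(z) db
approx = np.sum(sigmoid_prime(z) * x * dw) + sigmoid_prime(z) * db
print(exact, approx)
```

the two numbers agree up to a term of order \((\Delta z)^2\), which is what a first-order approximation predicts.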

- the input and output layers of a neural network are usually straightforward to design (e.g. 784 = 28 × 28 input neurons, one per pixel of an mnist image), but there is an art to the design of the hidden layers.

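- feedforward neural networks have no loops: the output of each layer is only ever used as input to the next layer, so information is always fed forward.

- recurrent neural networks, by contrast, allow feedback loops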
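to make "no loops" concrete, here is a sketch of a feedforward pass, assuming numpy and layer sizes of 784 inputs (one per pixel of a 28 × 28 image), 10 outputs (one per digit) and a hidden layer of 30 neurons (an arbitrary illustrative choice). each layer is one matrix multiply, applied strictly in order:

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# layer sizes: 784 inputs, 30 hidden neurons (illustrative), 10 outputs
sizes = [784, 30, 10]
rng = np.random.default_rng(0)
weights = [rng.standard_normal((y, x)) for x, y in zip(sizes[:-1], sizes[1:])]
biases = [rng.standard_normal((y, 1)) for y in sizes[1:]]

for w, b in zip(weights, biases):
    print("weights", w.shape, "biases", b.shape)

# no loops: one pass through the layers, each feeding only the next
a = rng.random((784, 1))
for w, b in zip(weights, biases):
    a = sigmoid(w @ a + b)

n_params = sum(w.size for w in weights) + sum(b.size for b in biases)
print(a.shape, n_params)
```

the weight matrix between a layer of \(x\) neurons and a layer of \(y\) neurons has shape \((y, x)\), the same convention as `self.weights` in the implementation.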

gradient descent

- the mnist training set (60,000 images) consists of handwriting samples from 250 people, half of whom were US Census Bureau employees and half of whom were high school students.

- the test set (10,000 images) was taken from a different set of 250 people, again split between Census Bureau employees and high school students.

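- we minimise the quadratic cost function (also called the mean squared error): \[C(w,b) \equiv \frac{1}{2n}\sum_x \|y(x) -a \|^2 \] where \(n\) is the number of training inputs, \(y(x)\) is the desired output for input \(x\), and \(a\) is the network's output when \(x\) is input (so \(a\) depends on \(w\), \(b\) and \(x\)).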

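- a good question to ask is why we minimise this auxiliary cost function rather than the number of misclassified examples directly.

- the answer is that the number of correctly classified examples is not a smooth function of the weights and biases: most small changes leave it unchanged, so it gives no signal about which direction to adjust them in.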

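treating the cost as a function of variables \(v = (v_1, \ldots, v_m)\), choosing the step \(\Delta v = -\eta\nabla C\) for a small, positive learning rate \(\eta\) makes \(\Delta C\) negative (to first order), so repeatedly applying the update drives \(C\) down: \[\begin{align*} \Delta C &\approx \nabla C \cdot \Delta v\\ \nabla C &\equiv \left( \cfrac{\partial C}{\partial v_1}, \ldots, \cfrac{\partial C}{\partial v_m} \right ) ^T \\ \Delta v &= -\eta \nabla C \\ \implies \Delta C &\approx -\eta \nabla C \cdot \nabla C = -\eta \|\nabla C\|^2 \le 0 \\ v&\rightarrow v' = v - \eta\nabla C \end{align*}\]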

then replacing the \(v_j\) with the weights \(w_k\) and biases \(b_l\) gives the gradient descent update rule for a neural network:

\[\begin{align*} w_k \rightarrow w_k' &= w_k - \eta\cfrac{\partial C}{\partial w_k}\\ b_l \rightarrow b_l' &= b_l - \eta\cfrac{\partial C}{\partial b_l} \end{align*}\]
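a minimal sketch of the generic rule \(v \rightarrow v - \eta\nabla C\) on a toy cost \(C(v) = v_1^2 + v_2^2\) (an illustrative choice, with gradient \(2v\)), assuming numpy; \(\eta = 0.1\) and the starting point are arbitrary:

```python
import numpy as np


def cost(v):
    # toy cost C(v) = v_1^2 + v_2^2, minimised at v = 0
    return float(np.sum(v ** 2))


def grad_cost(v):
    # its gradient: (dC/dv_1, dC/dv_2) = (2 v_1, 2 v_2)
    return 2 * v


eta = 0.1  # learning rate
v = np.array([3.0, -4.0])
history = [cost(v)]
for _ in range(50):
    v = v - eta * grad_cost(v)  # v -> v' = v - eta * grad C
    history.append(cost(v))

print(v, history[:3])
```

with this cost each step multiplies \(v\) by \(1 - 2\eta = 0.8\), so \(C\) falls by a factor of 0.64 per step; with \(\eta > 1\) the same update would overshoot and diverge.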

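however, the cost is an average \(C = \frac{1}{n}\sum_x C_x\) of per-example costs \(C_x \equiv \frac{\|y(x)-a\|^2}{2}\), so computing \(\nabla C\) exactly means computing \(\nabla C_x\) separately for every training input and then averaging; for large training sets this makes each step very slow.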

stochastic gradient descent speeds this up by estimating \(\nabla C\) from a small random sample of \(m\) training inputs \(x_1, \ldots, x_m\), called a mini-batch:

\[\begin{align*} \nabla C &= \cfrac{\sum_x \nabla C_x}{n} \\ &\approx \cfrac{\sum^m_{j=1}\nabla C_{x_j}}{m} \end{align*}\]

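and then uses this estimate in the update rule in place of the full gradient; working through mini-batches until the training inputs are exhausted completes one epoch of training: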

\[\begin{align*} w_k \rightarrow w_k' &= w_k - \frac{\eta}{m}\sum_j\cfrac{\partial C_{x_j}}{\partial w_k}\\ b_l \rightarrow b_l' &= b_l - \frac{\eta}{m}\sum_j\cfrac{\partial C_{x_j}}{\partial b_l} \end{align*}\]
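a sketch of mini-batch stochastic gradient descent on a problem small enough to check by eye: fitting \(a = wx + b\) to synthetic data from \(y = 3x + 1\) with per-example cost \(C_x = (a - y)^2/2\), so \(\partial C_x/\partial w = (a-y)x\) and \(\partial C_x/\partial b = a - y\). the data, learning rate, mini-batch size and epoch count are all illustrative assumptions, and numpy is assumed:

```python
import numpy as np

rng = np.random.default_rng(0)

# synthetic data from y = 3x + 1 plus a little noise (illustrative, not mnist)
n = 1000
xs = rng.uniform(-1.0, 1.0, n)
ys = 3.0 * xs + 1.0 + 0.1 * rng.standard_normal(n)

w, b = 0.0, 0.0
eta, m, epochs = 0.5, 10, 20  # learning rate, mini-batch size, epochs

for epoch in range(epochs):
    order = rng.permutation(n)  # shuffle, then split into mini-batches
    for k in range(0, n, m):
        idx = order[k:k + m]
        err = (w * xs[idx] + b) - ys[idx]  # a - y for each example in the batch
        # sum the per-example gradients of C_x = (a - y)^2 / 2 over the batch
        grad_w = np.sum(err * xs[idx])
        grad_b = np.sum(err)
        # w -> w - (eta / m) * sum_j dC_xj/dw, and likewise for b
        w -= (eta / m) * grad_w
        b -= (eta / m) * grad_b

print(w, b)
```

each pass over the shuffled data is one epoch, mirroring the `SGD` method in the implementation; \(w\) and \(b\) should end up close to 3 and 1.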

implementation

```python
import random

import numpy as np


class Network(object):

    def __init__(self, sizes):
        """``sizes`` lists the number of neurons in each layer, e.g. [2, 3, 1]."""
        self.num_layers = len(sizes)
        self.sizes = sizes
        # the input layer has no biases, so biases start at the second layer;
        # each layer has a single bias per neuron, stored as a (y, 1) column
        self.biases = [np.random.randn(y, 1) for y in sizes[1:]]
        # one weight matrix per pair of adjacent layers, of shape (y, x):
        # entry [j, k] connects neuron k of the earlier layer (x neurons)
        # to neuron j of the next layer (y neurons)
        self.weights = [np.random.randn(y, x)
                        for x, y in zip(sizes[:-1], sizes[1:])]

    def feedforward(self, a):
        """Return the output of the network if ``a`` is input."""
        # each layer pairs one bias vector with one weight matrix
        for b, w in zip(self.biases, self.weights):
            a = sigmoid(np.dot(w, a) + b)
        return a

    def SGD(self, training_data, epochs, mini_batch_size, eta,
            test_data=None):
        """Train the neural network using mini-batch stochastic gradient
        descent. ``training_data`` is a list of tuples ``(x, y)``
        representing the training inputs and the desired outputs. The
        other non-optional parameters are self-explanatory. If
        ``test_data`` is provided then the network will be evaluated
        against the test data after each epoch, and partial progress
        printed out. This is useful for tracking progress, but slows
        things down substantially."""
        if test_data:
            n_test = len(test_data)
        n = len(training_data)
        for j in range(epochs):
            # reshuffle every epoch, then slice into consecutive mini-batches
            random.shuffle(training_data)
            mini_batches = [training_data[k:k + mini_batch_size]
                            for k in range(0, n, mini_batch_size)]
            for mini_batch in mini_batches:
                self.update_mini_batch(mini_batch, eta)
            if test_data:
                print("Epoch {0}: {1} / {2}".format(
                    j, self.evaluate(test_data), n_test))
            else:
                print("Epoch {0} complete".format(j))

    def update_mini_batch(self, mini_batch, eta):
        """Update the network's weights and biases by applying gradient
        descent using backpropagation to a single mini batch. The
        ``mini_batch`` is a list of tuples ``(x, y)``, and ``eta`` is the
        learning rate."""
        nabla_b = [np.zeros(b.shape) for b in self.biases]
        nabla_w = [np.zeros(w.shape) for w in self.weights]
        # accumulate the per-example gradients over the mini-batch
        for x, y in mini_batch:
            delta_nabla_b, delta_nabla_w = self.backprop(x, y)
            nabla_b = [nb + dnb for nb, dnb in zip(nabla_b, delta_nabla_b)]
            nabla_w = [nw + dnw for nw, dnw in zip(nabla_w, delta_nabla_w)]
        # step against the averaged gradient: w -> w - (eta / m) * sum_j dC_xj/dw
        self.weights = [w - (eta / len(mini_batch)) * nw
                        for w, nw in zip(self.weights, nabla_w)]
        self.biases = [b - (eta / len(mini_batch)) * nb
                       for b, nb in zip(self.biases, nabla_b)]

    def backprop(self, x, y):
        """Return a tuple ``(nabla_b, nabla_w)`` representing the gradient
        for the cost function C_x. ``nabla_b`` and ``nabla_w`` are
        layer-by-layer lists of numpy arrays, similar to ``self.biases``
        and ``self.weights``."""
        nabla_b = [np.zeros(b.shape) for b in self.biases]
        nabla_w = [np.zeros(w.shape) for w in self.weights]
        # feedforward
        activation = x
        activations = [x]  # list to store all the activations, layer by layer
        zs = []  # list to store all the z vectors, layer by layer
        for b, w in zip(self.biases, self.weights):
            z = np.dot(w, activation) + b
            zs.append(z)
            activation = sigmoid(z)
            activations.append(activation)
        # backward pass
        delta = self.cost_derivative(activations[-1], y) * sigmoid_prime(zs[-1])
        nabla_b[-1] = delta
        nabla_w[-1] = np.dot(delta, activations[-2].transpose())
        # l = 1 denotes the last layer, l = 2 denotes the second-last, etc.
        for l in range(2, self.num_layers):
            z = zs[-l]
            sp = sigmoid_prime(z)
            delta = np.dot(self.weights[-l + 1].transpose(), delta) * sp
            nabla_b[-l] = delta
            nabla_w[-l] = np.dot(delta, activations[-l - 1].transpose())
        return (nabla_b, nabla_w)

    def evaluate(self, test_data):
        """Return the number of test inputs for which the neural network
        outputs the correct result. Note that the neural network's output
        is assumed to be the index of whichever neuron in the final layer
        has the highest activation."""
        test_results = [(np.argmax(self.feedforward(x)), y)
                        for (x, y) in test_data]
        return sum(int(x == y) for (x, y) in test_results)

    def cost_derivative(self, output_activations, y):
        """Return the vector of partial derivatives partial C_x / partial a
        for the output activations (for the quadratic cost, simply a - y)."""
        return (output_activations - y)


def sigmoid(z):
    """The sigmoid function, applied elementwise to numpy arrays."""
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_prime(z):
    """Derivative of the sigmoid function."""
    return sigmoid(z) * (1 - sigmoid(z))


if __name__ == '__main__':
    # a tiny network: 2 input neurons, 3 hidden neurons, 1 output neuron
    net = Network([2, 3, 1])
```

loading the mnist data

```python
import gzip
import pickle

import numpy as np


def load_data():
    """Return the mnist data as (training_data, validation_data, test_data)."""
    # the pickle file was created with python 2, so decode it as latin1
    with gzip.open('./authors/data/mnist.pkl.gz', 'rb') as f:
        training_data, validation_data, test_data = pickle.load(
            f, encoding='latin1')
    return (training_data, validation_data, test_data)


def load_data_wrapper():
    """Reshape each image into a (784, 1) column and one-hot encode the
    training labels; validation and test labels stay as integers, which
    is what ``Network.evaluate`` compares the argmax against."""
    tr_d, va_d, te_d = load_data()
    training_inputs = [np.reshape(x, (784, 1)) for x in tr_d[0]]
    training_results = [vectorised_result(y) for y in tr_d[1]]
    training_data = list(zip(training_inputs, training_results))
    validation_inputs = [np.reshape(x, (784, 1)) for x in va_d[0]]
    validation_data = list(zip(validation_inputs, va_d[1]))
    test_inputs = [np.reshape(x, (784, 1)) for x in te_d[0]]
    test_data = list(zip(test_inputs, te_d[1]))
    return (training_data, validation_data, test_data)


def vectorised_result(j):
    """Return a (10, 1) unit vector with 1.0 in position j (the digit j)."""
    e = np.zeros((10, 1))
    e[j] = 1.0
    return e
```
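`sigmoid_prime` relies on the identity \(\sigma'(z) = \frac{e^{-z}}{(1+e^{-z})^2} = \sigma(z)(1-\sigma(z))\). a quick sketch, assuming numpy, that checks it against a central finite difference:

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_prime(z):
    # closed form used by backprop: sigma'(z) = sigma(z) * (1 - sigma(z))
    return sigmoid(z) * (1 - sigmoid(z))


# compare against a central finite difference (sigma(z+h) - sigma(z-h)) / 2h
z = np.linspace(-5.0, 5.0, 11)
h = 1e-5
numeric = (sigmoid(z + h) - sigmoid(z - h)) / (2 * h)
print(np.max(np.abs(numeric - sigmoid_prime(z))))
```

the maximum difference is tiny (limited by the finite-difference step), and \(\sigma'(0) = 0.25\) is the largest value the derivative takes.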